Digital humans

AI is ushering in a new era where creativity and business strategy intersect.

Learn how natural language computing combined with AI-driven UX can lead to a quantum leap in customer experience.

Product considerations

How can you differentiate a conversational AI in a commoditized chatbot market that is heating up?

How might we offer a broader business opportunity to our partners and clients?

How might we address the consumer market?

How might we make our experiences the most natural they can be?

Digital humans can reflect empathy, express emotion, and take action – delivering help where and when needed.

Send & receive video calls with a digital colleague.

Available on iOS, Android, and mobile web.

Joining IPsoft

When I joined IPsoft in 2016, the company had a rudimentary user experience and was predominantly run by the engineering team. Its first iteration of AI algorithms could understand only limited conversational norms.

I was asked to build a team to come up with the strategy and execute the design for a customer-facing product. My remit covered two main efforts:

Build a conversational platform.

Enhance Amelia’s conversational language.

Human-like thinking machines

One thing that machines still find challenging today is the ability to demonstrate empathy.

If a machine could deliver empathy at scale, it could make every interaction with a user unique to them, providing personalized service and massive value to the business. But the AI space has struggled, I think in part because we humans are hard-wired to communicate on many levels, especially through non-verbal methods such as body posture, facial expressions, hand gestures, the intonation and prosody in our voice, the way we breathe, and so on.

For Amelia to get to the next level she would need to mimic many of those aspects.

What would the benefit be of such a human-like thinking machine?

How would you monetize it?

How would you bring it to market?

Amelia v.05

Prior to my joining the company.

Aligning on vision

Ever since my experience with IBM Watson, I’ve been obsessed with the idea of bringing digital humans to market.

IPsoft had a simple offering, was well funded, nimble and fast moving, and the CEO had vision. The company had a history of ‘firsts’ and a technical background in automation.

Design thinking was needed to enhance the user experience. We also needed to reorient the hiring practices and articulate a product roadmap that everyone could get behind.

The company needed a North Star strategy.

If I asked seven different people within the company what Amelia was, I’d get seven different answers. It was clear to me that the company didn’t know what it had in its AI and didn’t have a clear vision of where to take the product.

The 20-year-old, B2B-focused, family-owned company had little experience outside of its own orbit. The corporate dynamics meant that driving change required building influence among the CEO’s inner circle of VPs.

My focus was to build trust and influence with clients, analysts, and senior stakeholders within the organization, listening to them in order to pull together an aligned vision of the platform and then articulate that vision as clearly as possible so that all efforts could be driven towards a shared goal.

The mission statement

We magnify humanity’s capabilities.

Why – Working together, humans + avatars can magnify humanity’s capabilities.

How – We will surface the know-how that is trapped in people’s minds, making knowledge accessible & actionable where it is needed most.

What – Our avatars will converse with audiences across the globe, enabling trillions of valuable personalized experiences.

Elements of human emulation

Brain

Native Language

Dialect

Persona

Emotional intelligence

Face

Range of emotional expressions

Real-time organic motion

Eye tracking

Facial recognition

Voice

Intonation

Prosody

Pitch

Helpfulness

Knowledge

Sourcing

Validation

Analytics

Sharing

Experience

Processes

Integrations

UX/UI control

Responsive presentation

Evolution of the avatar

2016

When I joined the company, Amelia looked like a plastic Barbie doll and had very limited facial expressions. The poly count was kept low so that the R&D team could optimize performance.

(Unity engine - Desktop web only).

2017

After scanning a real person in 3D, we leveraged real texture maps, 4x the poly count and resolution, and included motion capture to improve the gestures and facial expressions.

(Unity engine - Desktop web only).

2017

Moving to the Unreal engine was a game changer in terms of overall fidelity, natural-looking motion, and look and feel. We were still encumbered by the high processing cost of running such software locally on the device, which impacted lip sync and overall response times.

(Unreal engine - Desktop web only).

Behind the scenes

Video game characters and the CGI characters you see in the movies differ from ours in that they are hand-animated so that every frame looks amazing.

Our digital human needs to be driven by code because it has to react in real-time to what the user is saying. There is no way to pre-render, which makes it much more difficult to get human levels of fidelity - what’s known in the business as passing the Turing test - making someone think the ‘robot’ is human. As the avatar gets closer to human likeness it enters the ‘uncanny valley’, where it becomes exponentially harder to maintain the illusion of being human.

AI-powered expressions

Early motion-capture-driven test, with test voice.

Here is an early work-in-progress, behind-the-scenes image of Amelia as a full-body 3D avatar. The background image is a map of the IPsoft HQ floor. We are working on obstacle avoidance so that Amelia can walk the floors. We also have NFC enabled, so that Amelia can address employees and guests by name (based on their office pass). Coming to an Oculus soon!

Make it compelling

Real-time render of the AI Amelia.

Amelia installs and configures itself, presenting options to the client.

Behind-the-scenes

While the rigging & construction of the avatar are nothing new, the fact that it reacts to text or voice conversations in real-time is. A separate AI drives the facial animations, bringing new levels of empathy to Amelia.

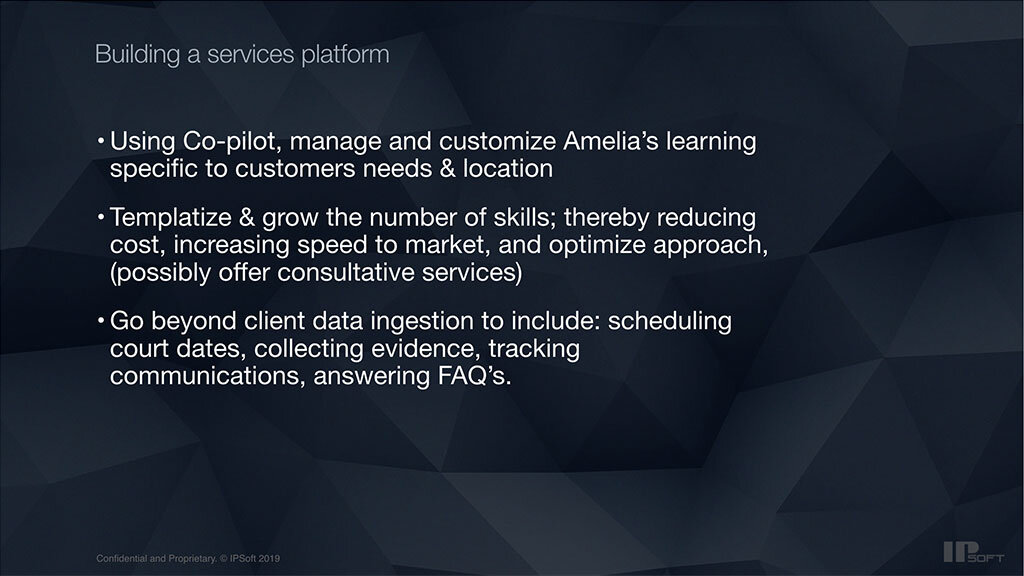

Business opportunity

How does a business benefit from such a service?

Digital humans are trained in specific job functions, working at machine speed and at scale.

They integrate into systems of record to have up-to-date information on hand at all times.

Data from every interaction will enable innovation.

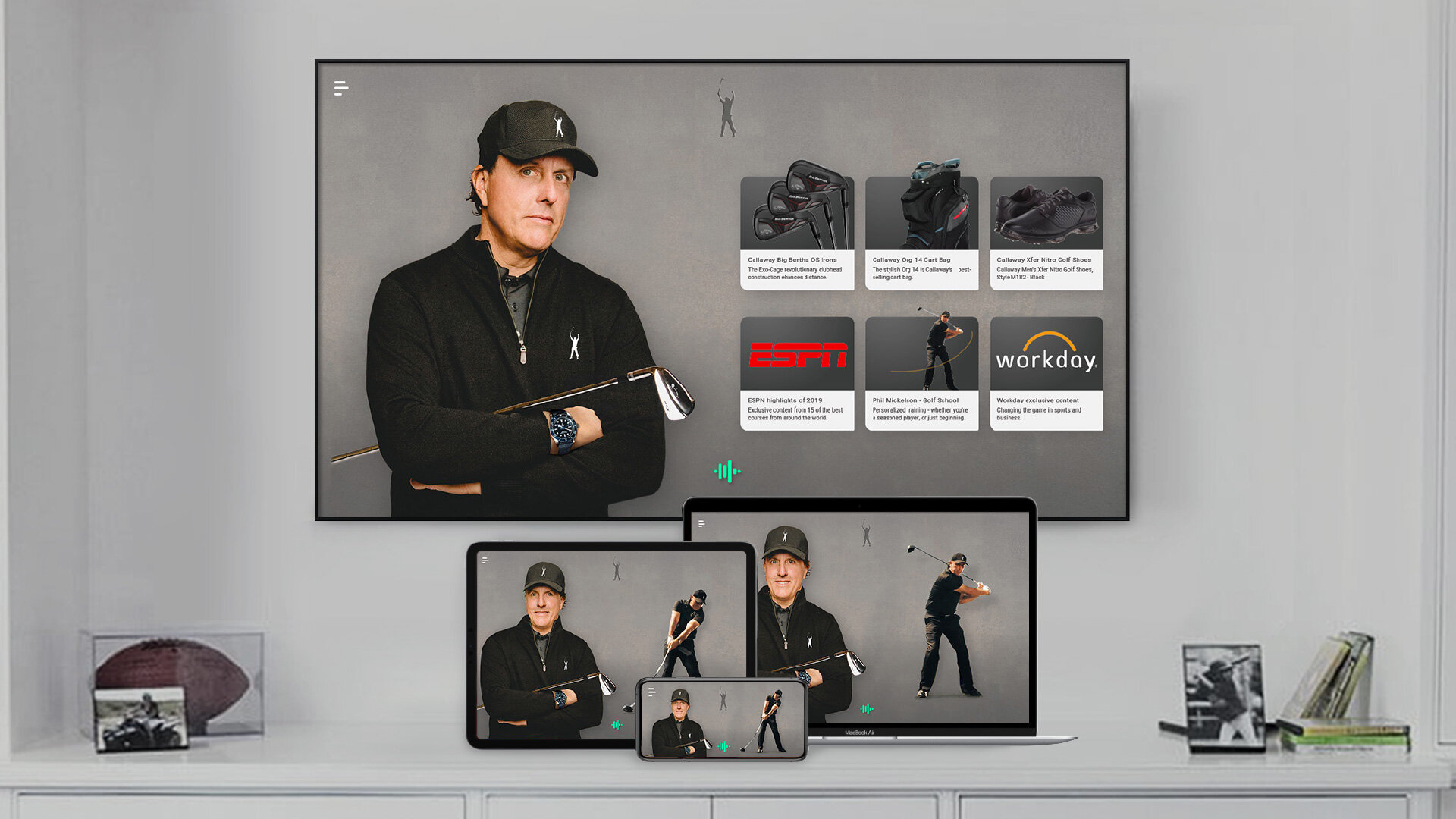

Advertisers and content providers can supplement the avatar experience.

How does a digital human engage with a user?

They communicate like humans (but never go off script), in over 100 languages.

The appearance & voice can be fully customized.

Digital humans as a service

Digital humans can be used in numerous verticals for internal & external roles.

Enabling clients to build and maintain their own digital human requires a multidisciplinary team of technologists, data scientists, digital coaches, linguists, UX designers and customer service teams. I set up training and documentation, and built the team to deliver consulting services to clients, helping them identify use cases and conduct roadmap planning.

We launched a new product called Co-pilot, an application designed to help train digital humans while concurrently supporting existing customer service groups with powerful new tools. These tools help organizations retain and codify knowledge, improve efficiency, train their employees on effective methods, and most importantly generate analytics that allow them to constantly optimize their business.

Creativity can enhance customer service, creating unique and differentiated services and capabilities. Brands can employ their advertising agency’s copywriters to help maintain the conversational language of their digital ambassador, extending their brand archetype across all touch points. This new approach means branding can be carried through the line from TV commercials to customer support phone calls with a consistent tone of voice.

The company used the kiosk internally for several roles - this one welcomes guests at the entrance of the innovation center.

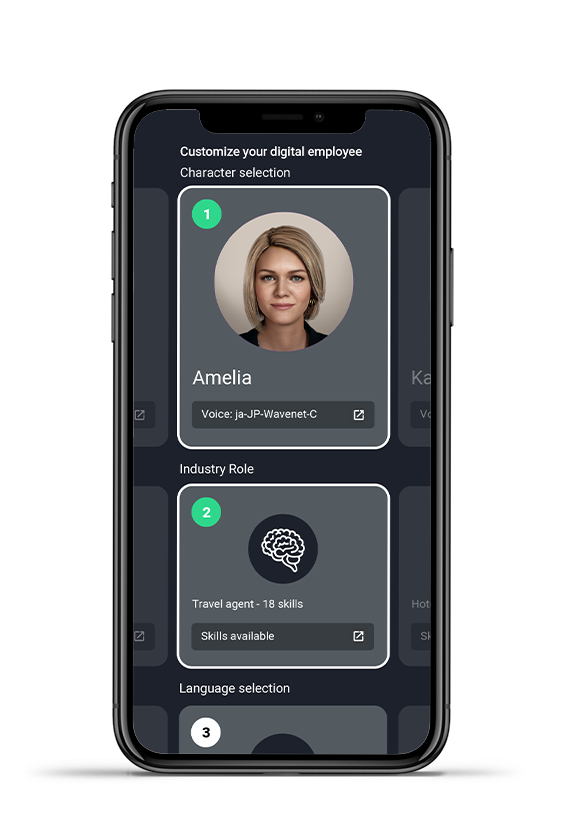

How do you hire a digital human?

We came up with the idea of having a store-like experience where clients could customize their own digital employees.

The digital human store would allow third-party partners to upload pre-trained knowledge modules based on their subject matter expertise.

Customers can pick from a collection of skills and roles, select a face (either a 2D or 3D avatar), and then pick the languages they’d like it to be fluent in.

Select an avatar, role, and language…

The doctor can really see you now

Doctors wish they could spend quality time with their patients, but paperwork means they have limited time to focus on what matters most. With a human-like digital assistant, patients can share more data without fear of being judged, medical staff can spend less time dealing with paperwork, insurance companies get more detailed notes, and the AI assistant can follow up after the appointment - making time with the doctor more efficient.